I. Implementing the UDFs

Goal: two functions. add_random appends a random number to a value, and remove_random strips that random number off again.

Each UDF class implements an evaluate method.
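Hive's legacy `org.apache.hadoop.hive.ql.exec.UDF` base class does not declare an abstract `evaluate`. Hive locates a suitable `evaluate` method by reflection, matching the argument types of the call. The toy below only illustrates that idea with plain `java.lang.reflect`; `ToyUpperUdf` and `ReflectiveCall` are hypothetical names, and Hive's real resolver additionally handles overloads and type conversion.

```java
import java.lang.reflect.Method;
import java.util.Locale;

// Hypothetical UDF-like class: like a Hive UDF, it exposes a public evaluate()
// without implementing any interface that declares it.
final class ToyUpperUdf {
    public String evaluate(String s) {
        return s == null ? null : s.toUpperCase(Locale.ROOT); // NULL in, NULL out
    }
}

// Toy caller (not Hive's resolver): finds evaluate(String) by name and
// parameter type, then invokes it on a fresh instance of the class.
final class ReflectiveCall {
    static Object callEvaluate(Class<?> udfClass, String arg) {
        try {
            Method evaluate = udfClass.getMethod("evaluate", String.class);
            Object udf = udfClass.getDeclaredConstructor().newInstance();
            return evaluate.invoke(udf, new Object[] {arg});
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("cannot call evaluate on " + udfClass.getName(), e);
        }
    }

    public static void main(String[] args) {
        System.out.println(callEvaluate(ToyUpperUdf.class, "smith")); // prints SMITH
    }
}
```

This is why a method with the wrong name or parameter types compiles fine but only fails when Hive tries to resolve the function call.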

UDFAddRandom.java

```java
package org.apache.hadoop.hive.ql.udf;
```
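Only the package line of this class is shown above. A minimal sketch of the logic, assuming add_random appends `_` plus one random digit from 0 to 9 (consistent with sample output such as `SMITH_8`). It works on plain `String` so it runs without Hive on the classpath; in the real UDF this would be the `evaluate` method of a class extending `org.apache.hadoop.hive.ql.exec.UDF`, commonly taking and returning `org.apache.hadoop.io.Text`. `AddRandomSketch` is a hypothetical name.

```java
import java.util.concurrent.ThreadLocalRandom;

// Sketch of add_random's assumed logic: value -> value + "_" + digit 0..9.
// In Hive this body would live in evaluate() of a class extending
// org.apache.hadoop.hive.ql.exec.UDF; plain String keeps it standalone here.
final class AddRandomSketch {
    // Exclusive upper bound of the random suffix; 10 yields a single digit.
    private static final int BOUND = 10;

    public String evaluate(String value) {
        if (value == null) {
            return null; // SQL NULL in, NULL out
        }
        int suffix = ThreadLocalRandom.current().nextInt(BOUND); // 0..9 inclusive
        return value + "_" + suffix;
    }

    public static void main(String[] args) {
        // Prints SMITH_ followed by one random digit.
        System.out.println(new AddRandomSketch().evaluate("SMITH"));
    }
}
```

Because the result is random, a real Hive version of this class would normally be annotated with `@UDFType(deterministic = false)` (from `org.apache.hadoop.hive.ql.udf`), as Hive's built-in `rand()` is, so the optimizer does not treat a call with constant arguments as a constant.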

UDFRemoveRandom.java

```java
package org.apache.hadoop.hive.ql.udf;
```
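Likewise, only the package line of this class appears above. A minimal sketch, assuming remove_random strips a trailing `_<digits>` suffix like the one add_random appends; leaving values without such a suffix unchanged is an assumption, not something the original states. `RemoveRandomSketch` is a hypothetical name, and as before the real UDF would extend `org.apache.hadoop.hive.ql.exec.UDF`.

```java
import java.util.regex.Pattern;

// Sketch of remove_random's assumed logic: "SMITH_8" -> "SMITH".
// Values that do not end in "_<digits>" are returned unchanged.
final class RemoveRandomSketch {
    // An underscore followed by one or more digits at the end of the string.
    private static final Pattern RANDOM_SUFFIX = Pattern.compile("_\\d+$");

    public String evaluate(String value) {
        if (value == null) {
            return null; // SQL NULL in, NULL out
        }
        return RANDOM_SUFFIX.matcher(value).replaceFirst("");
    }

    public static void main(String[] args) {
        RemoveRandomSketch udf = new RemoveRandomSketch();
        System.out.println(udf.evaluate("SMITH_8")); // prints SMITH
        System.out.println(udf.evaluate("KING"));    // prints KING
    }
}
```

Anchoring the pattern at the end means an underscore inside the original value (for example `A_B_3`) survives; only the last numeric suffix is removed.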

II. Using the functions in queries

Temporary functions

Upload the jar containing the functions to the server; mine is in /home/hadoop/lib. Open a Hive session and add the jar:

```
hive (hive)> add jar /home/hadoop/lib/ruozedata-hive-1.0.jar;
Added [/home/hadoop/lib/ruozedata-hive-1.0.jar] to class path
Added resources: [/home/hadoop/lib/ruozedata-hive-1.0.jar]
```

Then create a temporary function named add_random:

```
hive (hive)> create temporary function add_random as 'com.ruozedata.hive.udf.UDFAddRandom';
OK
Time taken: 0.025 seconds
```

remove_random is created the same way.

Using the function

```
hive (hive)> select * from emp;
OK
emp.empno emp.ename emp.job emp.mgr emp.hiredate emp.sal emp.comm emp.deptno
7369 SMITH CLERK 7902 1980-12-17 800.0 NULL 20
7499 ALLEN SALESMAN 7698 1981-2-20 1600.0 300.0 30
7521 WARD SALESMAN 7698 1981-2-22 1250.0 500.0 30
7566 JONES MANAGER 7839 1981-4-2 2975.0 NULL 20
7654 MARTIN SALESMAN 7698 1981-9-28 1250.0 1400.0 30
7698 BLAKE MANAGER 7839 1981-5-1 2850.0 NULL 30
7782 CLARK MANAGER 7839 1981-6-9 2450.0 NULL 10
7788 SCOTT ANALYST 7566 1987-4-19 3000.0 NULL 20
7839 KING PRESIDENT NULL 1981-11-17 5000.0 NULL 10
7844 TURNER SALESMAN 7698 1981-9-8 1500.0 0.0 30
7876 ADAMS CLERK 7788 1987-5-23 1100.0 NULL 20
7900 JAMES CLERK 7698 1981-12-3 950.0 NULL 30
7902 FORD ANALYST 7566 1981-12-3 3000.0 NULL 20
7934 MILLER CLERK 7782 1982-1-23 1300.0 NULL 10
Time taken: 0.331 seconds, Fetched: 14 row(s)
hive (hive)> select ename,add_random(ename) from emp;
OK
ename _c1
SMITH SMITH_8
ALLEN ALLEN_5
WARD WARD_1
JONES JONES_0
MARTIN MARTIN_0
BLAKE BLAKE_9
CLARK CLARK_5
SCOTT SCOTT_7
KING KING_8
TURNER TURNER_6
ADAMS ADAMS_6
JAMES JAMES_2
FORD FORD_6
MILLER MILLER_0
Time taken: 0.882 seconds, Fetched: 14 row(s)
```

This UDF only lives in the current session: once you close the session, the function no longer exists.

To remove the UDF from the current session, run the following two commands in order:

```
drop temporary function if exists add_random;
delete jar /home/hadoop/lib/ruozedata-hive-1.0.jar;
```

After that, calling add_random fails:

```
hive (hive)> drop temporary function if exists add_random;
OK
Time taken: 0.024 seconds
hive (hive)> delete jar /home/hadoop/lib/ruozedata-hive-1.0.jar;
Deleted [/home/hadoop/lib/ruozedata-hive-1.0.jar] from class path
hive (hive)> select ename,add_random(ename) from emp;
FAILED: SemanticException [Error 10011]: Invalid function add_random
```

Permanent functions

A permanent UDF persists and is available to every Hive session.

Create a directory on HDFS:

```
[hadoop@hadoop001 ~]$ hdfs dfs -mkdir /lib/udflib
```

Upload the jar to HDFS:

```
[hadoop@hadoop001 ~]$ hdfs dfs -put /home/hadoop/lib/ruozedata-hive-1.0.jar /lib/udflib
[hadoop@hadoop001 ~]$ hdfs dfs -ls /lib/udflib
Found 1 items
-rw-r--r-- 1 hadoop supergroup 6098 2021-12-28 17:25 /lib/udflib/ruozedata-hive-1.0.jar
```

Open a Hive session and create the function from the jar on HDFS:

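```
hive (hive)> create function add_random as 'com.ruozedata.hive.udf.UDFAddRandom'
> using jar 'hdfs:///lib/udflib/ruozedata-hive-1.0.jar';
Added [/tmp/38a5942b-b210-4c46-b700-9a64ae6090b7_resources/ruozedata-hive-1.0.jar] to class path
Added resources: [hdfs:///lib/udflib/ruozedata-hive-1.0.jar]
OK
Time taken: 0.097 seconds
```

The function works, and it is also available in newly opened Hive sessions. Note that a permanent function belongs to the database that was current when it was created (here hive); from another database, refer to it by its qualified name, e.g. hive.add_random.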

III. Integrating the functions into the Hive source and rebuilding Hive

Extract the source package into a working directory:

```
[hadoop@hadoop001 apache-hive-3.1.2-src]$ pwd
/home/hadoop/software/apache-hive-3.1.2-src
```

Put the function source files in ql/src/java/org/apache/hadoop/hive/ql/udf:

```
[hadoop@hadoop001 apache-hive-3.1.2-src]$ cd ql/src/java/org/apache/hadoop/hive/ql/udf
[hadoop@hadoop001 udf]$ pwd
/home/hadoop/software/apache-hive-3.1.2-src/ql/src/java/org/apache/hadoop/hive/ql/udf
```

Edit FunctionRegistry.java:

```
[hadoop@hadoop001 apache-hive-3.1.2-src]$ cd ql/src/java/org/apache/hadoop/hive/ql/exec
[hadoop@hadoop001 exec]$ pwd
/home/hadoop/software/apache-hive-3.1.2-src/ql/src/java/org/apache/hadoop/hive/ql/exec
[hadoop@hadoop001 exec]$ vi FunctionRegistry.java
```

Add the new functions in the relevant places: the imports at the top of the file, and the registrations in the static initializer block:

```java
import org.apache.hadoop.hive.ql.udf.UDFAddRandom;
import org.apache.hadoop.hive.ql.udf.UDFRemoveRandom;
// ...

static {
    // Register each class under its SQL function name; false = not an operator
    system.registerUDF("add_random", UDFAddRandom.class, false);
    system.registerUDF("remove_random", UDFRemoveRandom.class, false);
    // ...
}
```

Build:

```
[hadoop@hadoop001 apache-hive-3.1.2-src]$ mvn clean package -Pdist -DskipTests -Dmaven.javadoc.skip=true
```

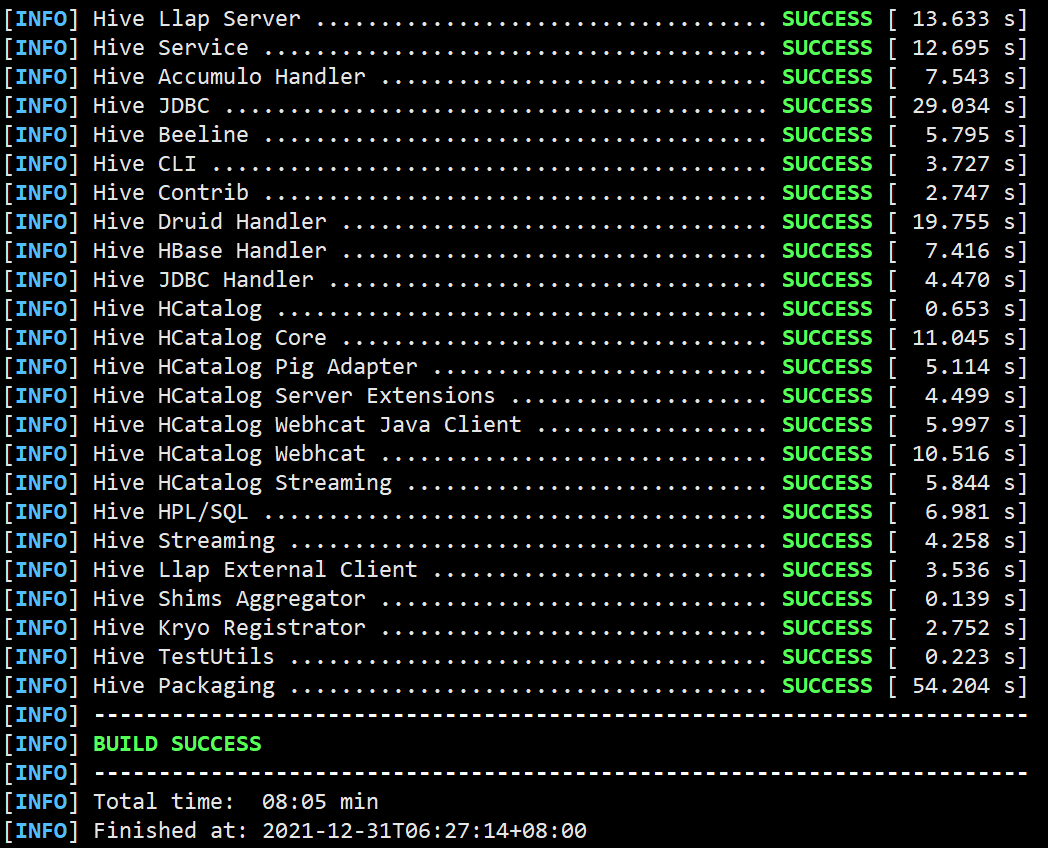

Wait for the build to finish.

Output directory

The apache-hive-3.1.2-bin.tar.gz under packaging/target/ is the tarball we need. (If you'd rather not redeploy Hive, see the jar-replacement step below.)

```
[hadoop@hadoop001 apache-hive-3.1.2-src]$ cd packaging/target/
[hadoop@hadoop001 target]$ ll
total 410320
drwxrwxr-x. 2 hadoop hadoop 4096 Dec 31 06:26 antrun
drwxrwxr-x. 3 hadoop hadoop 4096 Dec 31 06:26 apache-hive-3.1.2-bin
-rw-rw-r--. 1 hadoop hadoop 315855613 Dec 31 06:26 apache-hive-3.1.2-bin.tar.gz
-rw-rw-r--. 1 hadoop hadoop 77970637 Dec 31 06:27 apache-hive-3.1.2-jdbc.jar
-rw-rw-r--. 1 hadoop hadoop 26307866 Dec 31 06:27 apache-hive-3.1.2-src.tar.gz
drwxrwxr-x. 4 hadoop hadoop 4096 Dec 31 06:26 archive-tmp
drwxrwxr-x. 3 hadoop hadoop 4096 Dec 31 06:26 maven-shared-archive-resources
drwxrwxr-x. 7 hadoop hadoop 4096 Dec 31 06:26 testconf
drwxrwxr-x. 2 hadoop hadoop 4096 Dec 31 06:26 tmp
drwxrwxr-x. 2 hadoop hadoop 4096 Dec 31 06:26 warehouse
```

Deploy Hive (omitted).

Checking the functions

Either `show functions` or `desc function <function_name>` works:

```
[hadoop@hadoop001 ~]$ hive
which: no hbase in (/home/hadoop/app/hive/bin:/home/hadoop/app/scala/bin:/home/hadoop/app/hadoop/bin:/home/hadoop/app/hadoop/sbin:/home/hadoop/app/protobuf/bin:/home/hadoop/app/maven/bin:/usr/local/mysql/bin:/usr/java/jdk1.8.0_45/bin:/usr/lib64/qt-3.3/bin:/usr/local/bin:/bin:/usr/bin:/usr/local/sbin:/usr/sbin:/sbin)
SLF4J: Class path contains multiple SLF4J bindings.
SLF4J: Found binding in [jar:file:/home/hadoop/software/apache-hive-3.1.2-bin/lib/log4j-slf4j-impl-2.10.0.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: Found binding in [jar:file:/home/hadoop/software/hadoop-3.2.2/share/hadoop/common/lib/slf4j-log4j12-1.7.25.jar!/org/slf4j/impl/StaticLoggerBinder.class]
SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
SLF4J: Actual binding is of type [org.apache.logging.slf4j.Log4jLoggerFactory]
Hive Session ID = d35e6d35-1d7b-40ae-b86e-3bf09fc0f5d2

Logging initialized using configuration in jar:file:/home/hadoop/software/apache-hive-3.1.2-bin/lib/hive-common-3.1.2.jar!/hive-log4j2.properties Async: true
Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.
Hive Session ID = 5fca1029-8f4a-4c52-9d22-0b2a0942cc85
hive (default)> desc function add_random;
OK
tab_name
There is no documentation for function 'add_random'
Time taken: 0.078 seconds, Fetched: 1 row(s)
hive (default)> desc function remove_random;
OK
tab_name
There is no documentation for function 'remove_random'
Time taken: 0.049 seconds, Fetched: 1 row(s)
hive (default)>
```

Replacing the jar

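Locate the rebuilt hive-exec-3.1.2.jar, which will replace the installed one. The ql module, which contains FunctionRegistry and the udf package, is packaged into this jar:

```
[hadoop@hadoop001 lib]$ pwd
/home/hadoop/software/apache-hive-3.1.2-src/packaging/target/apache-hive-3.1.2-bin/apache-hive-3.1.2-bin/lib
[hadoop@hadoop001 lib]$ ll hive-exec-3.1.2.jar
-rw-rw-r--. 1 hadoop hadoop 41063609 Dec 31 06:26 hive-exec-3.1.2.jar
```

Go to the lib directory of the Hive installation currently in use, find the jar to be replaced, and rename it as a backup: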

```
[hadoop@hadoop001 lib]$ pwd
/home/hadoop/software/hive/lib
[hadoop@hadoop001 lib]$ ll hive-exec-3.1.2.jar
-rw-r--r--. 1 hadoop hadoop 40623961 Aug 23 2019 hive-exec-3.1.2.jar
[hadoop@hadoop001 lib]$ mv hive-exec-3.1.2.jar hive-exec-3.1.2.jar_bak
```

Copy in the rebuilt jar:

```
[hadoop@hadoop001 lib]$ cp /home/hadoop/software/apache-hive-3.1.2-src/packaging/target/apache-hive-3.1.2-bin/apache-hive-3.1.2-bin/lib/hive-exec-3.1.2.jar ./
[hadoop@hadoop001 lib]$ ll hive-exec-3.1.2.*
-rw-rw-r--. 1 hadoop hadoop 41063609 Dec 31 06:46 hive-exec-3.1.2.jar
-rw-r--r--. 1 hadoop hadoop 40623961 Aug 23 2019 hive-exec-3.1.2.jar_bak
```

Then restart Hive, and the functions are available.
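The back-up-then-replace step above (`mv`, then `cp`) can also be scripted. A minimal sketch with `java.nio.file`, assuming the installed jar and the rebuilt jar paths are passed in; `JarSwap` is a hypothetical helper, and the `_bak` suffix simply mirrors the naming used above.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Sketch of the "rename to _bak, then copy the rebuilt jar in" step.
final class JarSwap {
    // Renames target to target + "_bak", copies replacement to target's old path,
    // and returns the backup path.
    static Path backUpAndReplace(Path target, Path replacement) throws IOException {
        Path backup = target.resolveSibling(target.getFileName() + "_bak");
        // Without options, Files.move throws FileAlreadyExistsException if the
        // backup already exists, so an earlier backup is never overwritten.
        Files.move(target, backup);
        Files.copy(replacement, target); // target no longer exists at this point
        return backup;
    }

    public static void main(String[] args) throws IOException {
        // Usage: java JarSwap <installed hive-exec jar> <rebuilt hive-exec jar>
        Path backup = backUpAndReplace(Paths.get(args[0]), Paths.get(args[1]));
        System.out.println("backup written to " + backup);
    }
}
```

Refusing to overwrite an existing backup is deliberate: running the swap twice would otherwise replace the only copy of the original jar with the rebuilt one.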

IV. Building Hive from source

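I ran into several pitfalls while building the source for the steps above, and had to both change the Maven repository and patch Java files.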

At first I downloaded the src package directly from the official Hive download site, https://dlcdn.apache.org/hive/hive-3.1.2/.

In the source directory, start the build with:

```
mvn clean package -Pdist -DskipTests -Dmaven.javadoc.skip=true
```

Problem 1: pentaho-aggdesigner-algorithm-5.1.5-jhyde.jar is missing

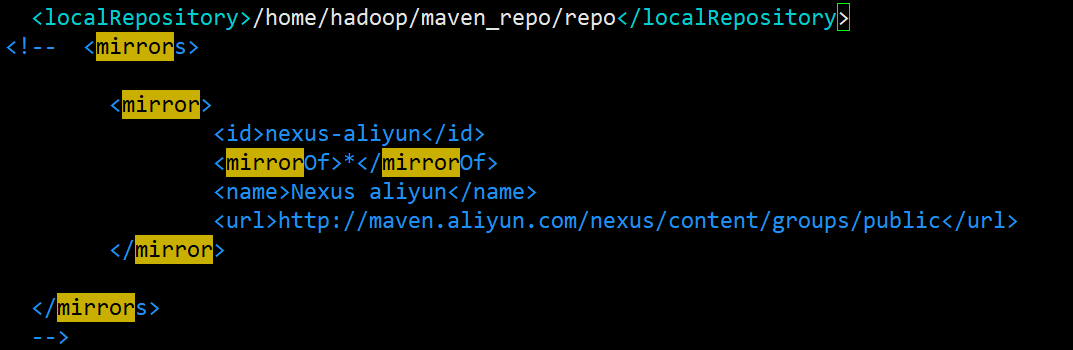

Add the Aliyun (Alibaba Cloud) repository mirror to Maven's conf/settings.xml:

```
[hadoop@hadoop001 ~]$ vi app/maven/conf/settings.xml
```

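Note: the `<mirror></mirror>` element must be inside `<mirrors></mirrors>`. I missed this at first: further down in the file there was another `<mirrors>` block that had not been commented out.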

In my file it is now commented out, because in the end I downloaded from the Maven repository and did not use Aliyun.

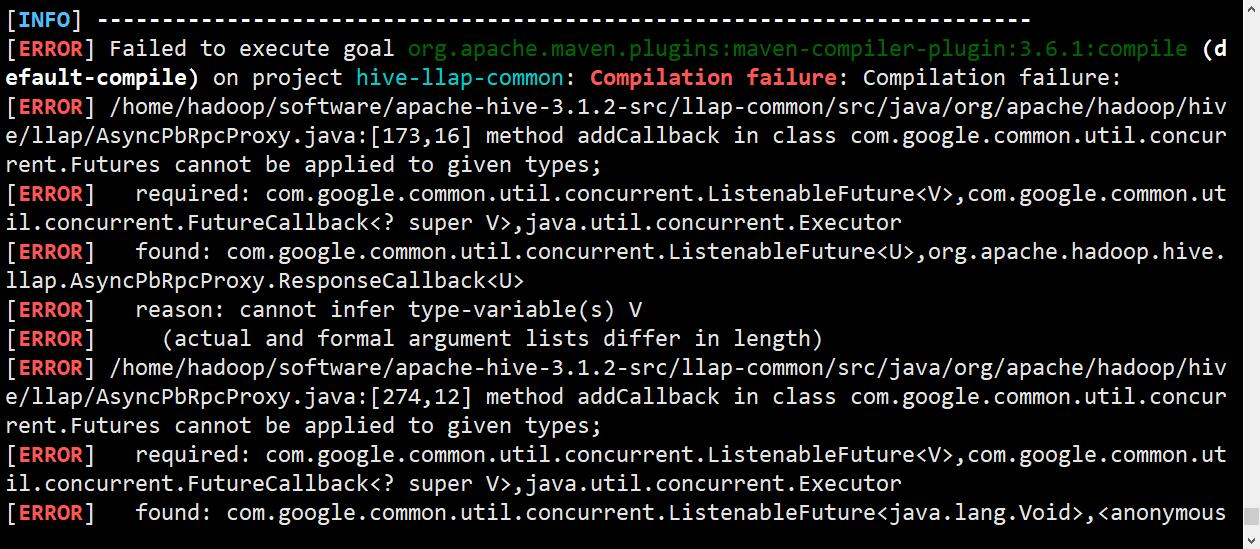
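Both mistakes in the note above (a `<mirror>` outside `<mirrors>`, and a second `<mirrors>` block left uncommented) are easy to miss in a long settings.xml. A small structural check can flag them; this is a sketch using the JDK's DOM parser, `MirrorCheck` is a hypothetical name, and it does not check whether a mirror URL is reachable.

```java
import java.io.File;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

// Structural check for a Maven settings.xml. Commented-out markup is parsed
// as comment nodes, not elements, so it is ignored here.
final class MirrorCheck {
    static List<String> problems(Document settings) {
        List<String> found = new ArrayList<>();
        // More than one <mirrors> block usually means an old one was left in.
        int blocks = settings.getElementsByTagName("mirrors").getLength();
        if (blocks > 1) {
            found.add(blocks + " <mirrors> blocks, expected at most 1");
        }
        // Every <mirror> must sit directly inside a <mirrors> element.
        NodeList mirrors = settings.getElementsByTagName("mirror");
        for (int i = 0; i < mirrors.getLength(); i++) {
            Element mirror = (Element) mirrors.item(i);
            Node parent = mirror.getParentNode();
            if (!"mirrors".equals(parent.getNodeName())) {
                NodeList ids = mirror.getElementsByTagName("id");
                String id = ids.getLength() > 0 ? ids.item(0).getTextContent().trim() : "(no id)";
                found.add("mirror " + id + " is inside <" + parent.getNodeName() + ">, not <mirrors>");
            }
        }
        return found;
    }

    public static void main(String[] args) throws Exception {
        // Usage: java MirrorCheck /path/to/maven/conf/settings.xml
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(new File(args[0]));
        List<String> found = problems(doc);
        System.out.println(found.isEmpty() ? "OK" : String.join("\n", found));
    }
}
```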

Problem 2: after adding the Aliyun repository and rebuilding, the build still fails

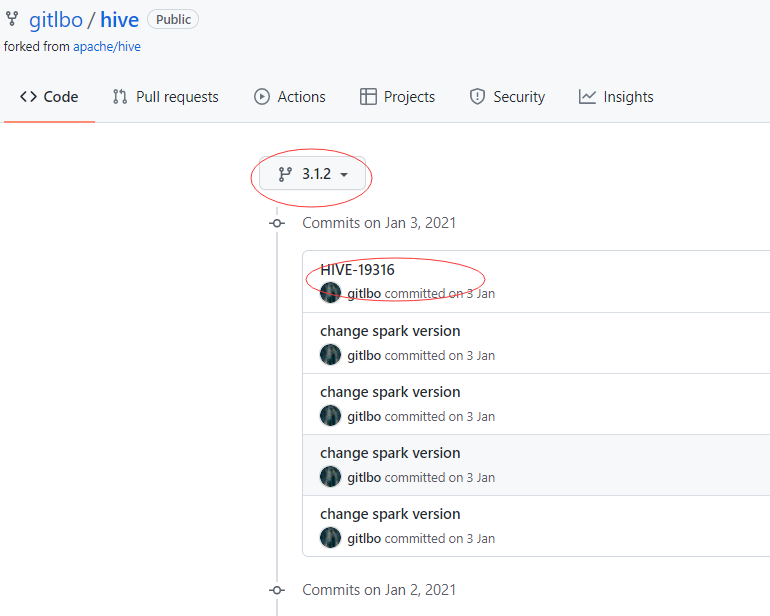

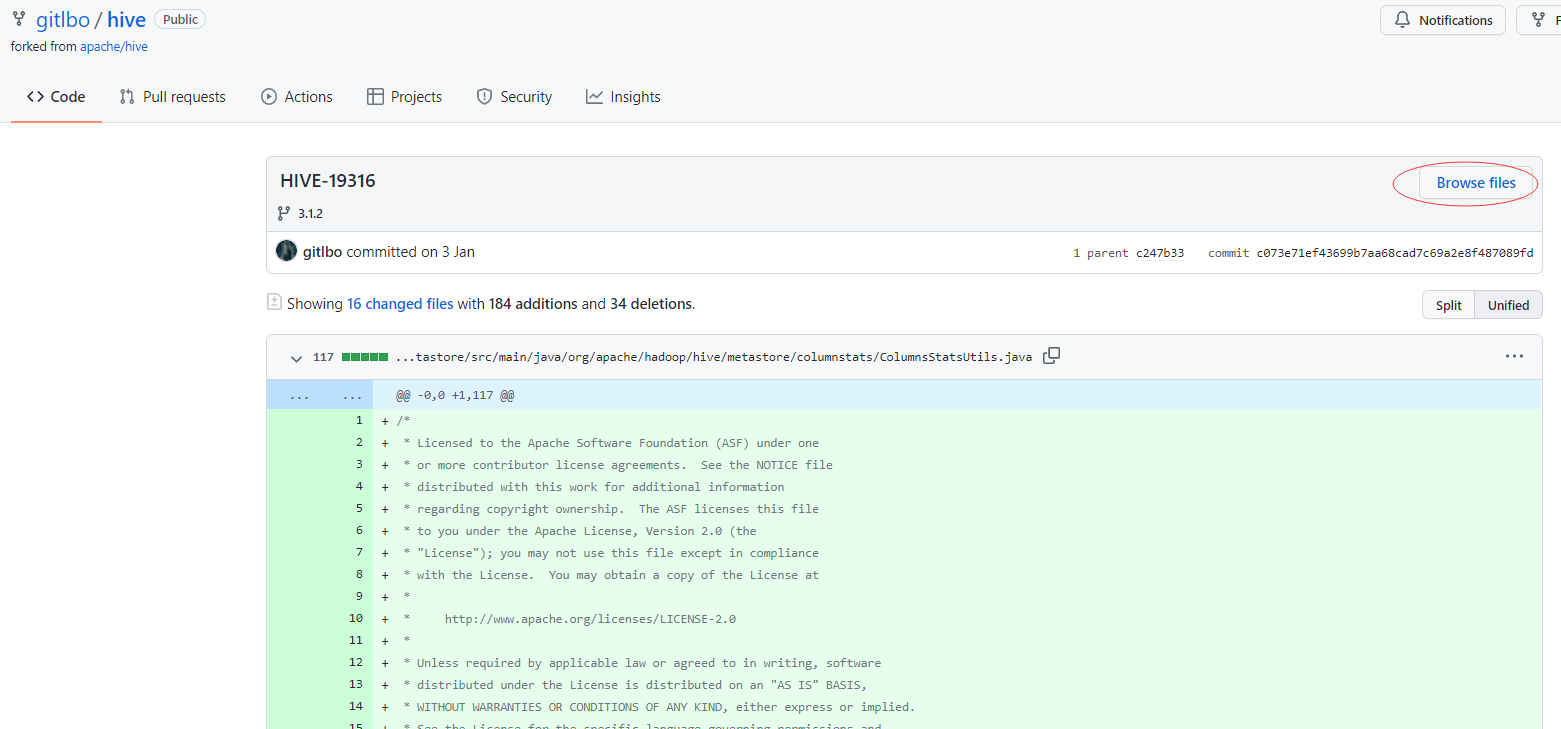

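Searching online, I found others with the same problem. The fix is to follow the error messages and patch several classes in the source, as in https://github.com/gitlbo/hive/commits/3.1.2. After applying those changes step by step, that module compiled, but errors then appeared elsewhere.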

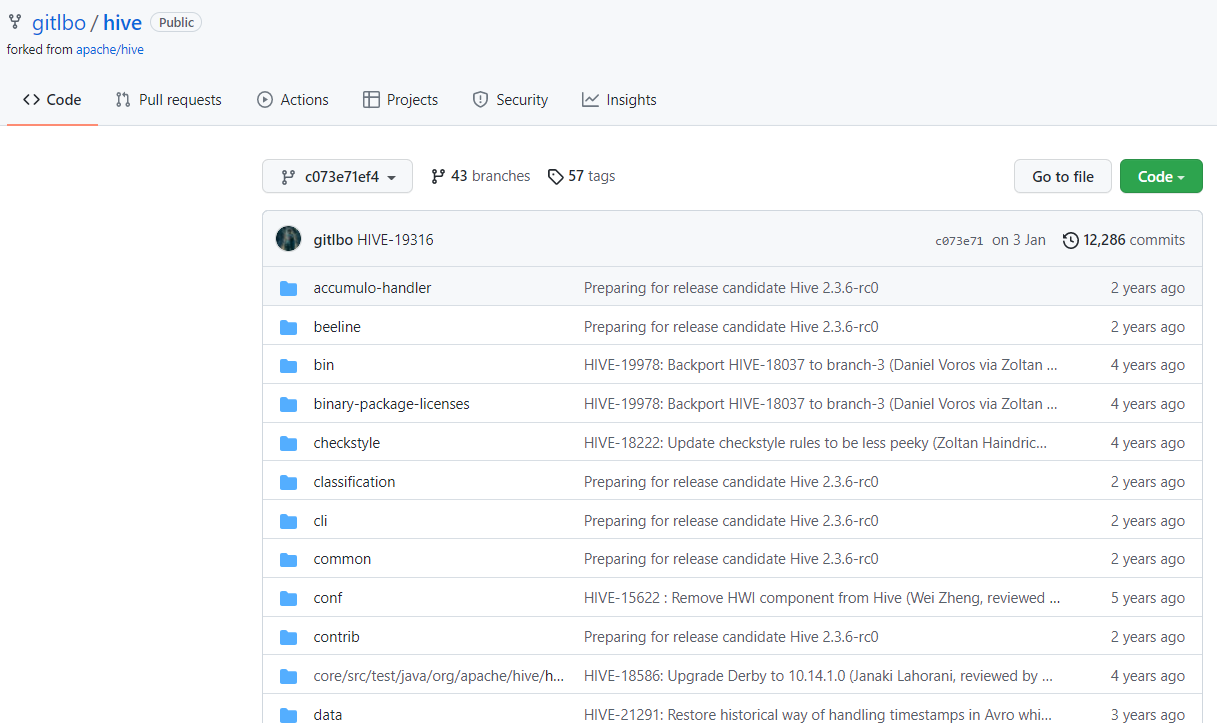

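Since I would have to apply all of those changes anyway, why not use the latest source from that repository directly? So I downloaded a fresh src package.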

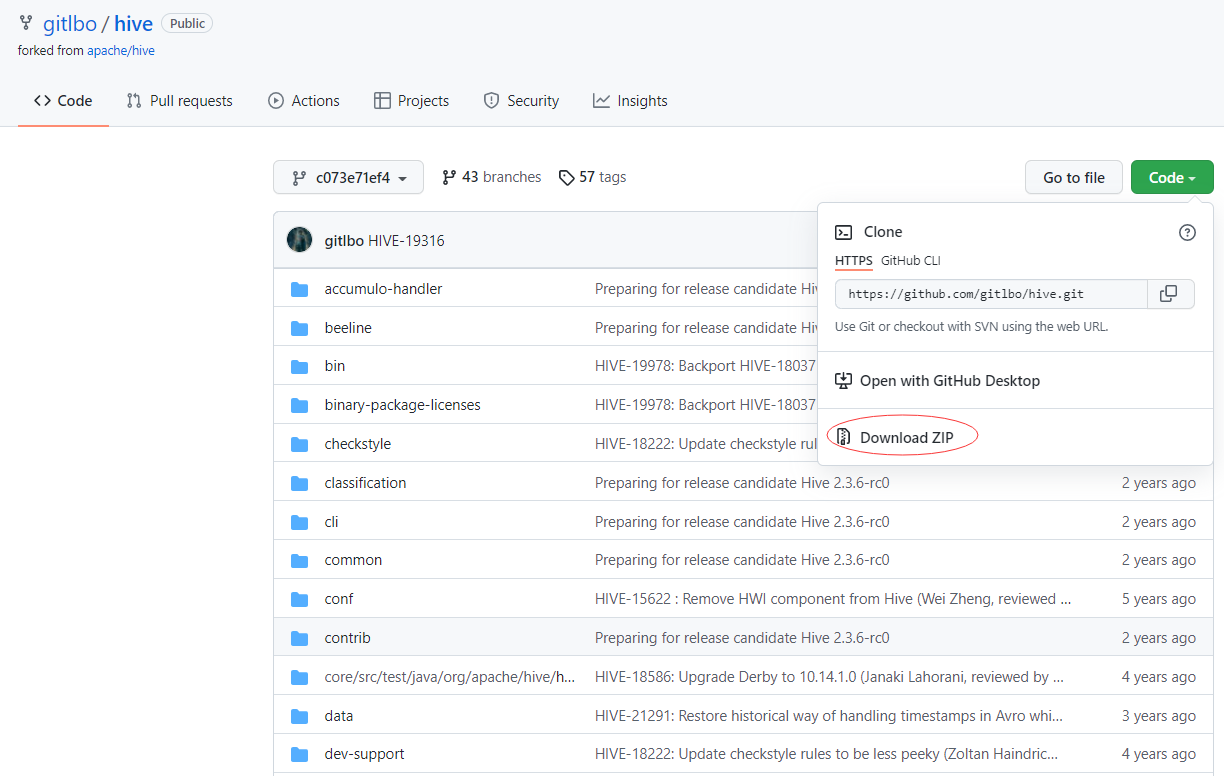

```
[hadoop@hadoop001 software]$ wget -O apache-hive-3.1.2-src.zip https://codeload.github.com/gitlbo/hive/zip/c073e71ef43699b7aa68cad7c69a2e8f487089fd
```

Then unzip it and change hadoop.version in pom.xml to my Hadoop version, 3.2.2. Adjust anything else as needed.
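Before starting a long build, it can be worth confirming the edit took effect. A sketch using the JDK's DOM parser that reads `hadoop.version` from the top-level `<properties>` of a pom.xml; it deliberately ignores any profile-level overrides, and `PomVersionCheck` is a hypothetical name.

```java
import java.io.File;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;

// Reads <project><properties><hadoop.version> from a pom.xml.
// Properties declared inside <profiles> are not considered.
final class PomVersionCheck {
    static String hadoopVersion(Document pom) {
        Element properties = firstChild(pom.getDocumentElement(), "properties");
        if (properties == null) {
            return null;
        }
        Element version = firstChild(properties, "hadoop.version");
        return version == null ? null : version.getTextContent().trim();
    }

    // First direct child element of parent with the given tag name, or null.
    private static Element firstChild(Element parent, String name) {
        for (Node n = parent.getFirstChild(); n != null; n = n.getNextSibling()) {
            if (n.getNodeType() == Node.ELEMENT_NODE && name.equals(n.getNodeName())) {
                return (Element) n;
            }
        }
        return null;
    }

    public static void main(String[] args) throws Exception {
        // Usage: java PomVersionCheck /path/to/apache-hive-3.1.2-src/pom.xml
        Document pom = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(new File(args[0]));
        System.out.println("hadoop.version = " + hadoopVersion(pom));
    }
}
```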

Build it with `mvn clean package -Pdist -DskipTests -Dmaven.javadoc.skip=true`. This time there were no errors and the build succeeded.

```
[hadoop@hadoop001 apache-hive-3.1.2-src]$ cd packaging/target/
```