Integrate the add-random and remove-random UDFs into the Hive source code, and test them from the terminal in IDEA:

ql/src/java/org/apache/hadoop/hive/ql/udf/UDFAddRandom.java

package org.apache.hadoop.hive.ql.udf;

ql/src/java/org/apache/hadoop/hive/ql/udf/UDFRemoveRandom.java

package org.apache.hadoop.hive.ql.udf;
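The post only shows the package line of the two UDF files, so the following is a minimal sketch of the kind of logic such a pair might contain, not the author's implementation. It assumes the random number is prepended as a prefix and separated from the original value by an underscore; the class name `RandomPrefixSketch`, the method names, and the `bound` parameter are all hypothetical. In a real Hive UDF this logic would typically sit inside the `evaluate` method of a class extending `org.apache.hadoop.hive.ql.exec.UDF`; it is kept in plain Java here so it runs without Hive on the classpath.

```java
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical helper, not the author's UDF source: one plausible
// "add random prefix / remove random prefix" scheme in plain Java.
public class RandomPrefixSketch {

    // Assumed separator between the random number and the original value.
    private static final String SEPARATOR = "_";

    // Prepend a random integer in [0, bound) to the value, e.g. "7_hello".
    public static String addRandom(String value, int bound) {
        if (value == null) {
            return null;
        }
        int prefix = ThreadLocalRandom.current().nextInt(bound);
        return prefix + SEPARATOR + value;
    }

    // Strip everything up to and including the first separator.
    // Values that contain no separator are returned unchanged.
    public static String removeRandom(String value) {
        if (value == null) {
            return null;
        }
        int idx = value.indexOf(SEPARATOR);
        return idx < 0 ? value : value.substring(idx + 1);
    }

    public static void main(String[] args) {
        String salted = addRandom("hello_world", 10);
        System.out.println(salted);               // random digit, then "_hello_world"
        System.out.println(removeRandom(salted)); // hello_world
    }
}
```

Only the first separator is treated as the boundary, so values that themselves contain underscores survive the round trip.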

Modify ql/src/java/org/apache/hadoop/hive/ql/exec/FunctionRegistry.java

import org.apache.hadoop.hive.ql.udf.UDFAddRandom;

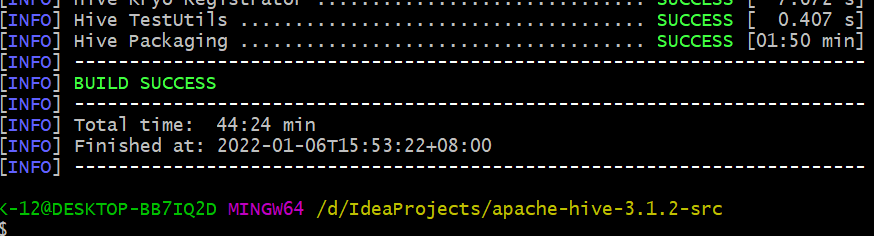

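Install Maven on Windows and compile Hive.

Import the entire compiled folder into IDEA.

Press Ctrl+Alt+Shift+S to open the project's JDK settings, increase the memory, and then rebuild.

During the rebuild, fix any errors as prompted until you see "Build completed successfully".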

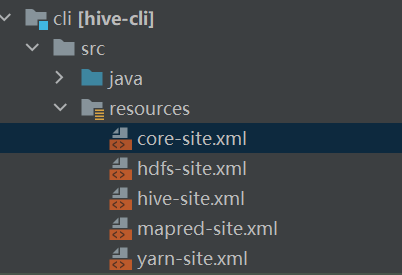

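Make hive-site.xml identical to the one on the server and place it in a resources folder under the CliDriver directory (you have to create this folder manually).

Simple approach: a single hive-site.xml is enough.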

hive-site.xml:

<?xml version="1.0" encoding="UTF-8" standalone="no"?>
<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>
<!--
   Licensed to the Apache Software Foundation (ASF) under one or more
   contributor license agreements. See the NOTICE file distributed with
   this work for additional information regarding copyright ownership.
   The ASF licenses this file to You under the Apache License, Version 2.0
   (the "License"); you may not use this file except in compliance with
   the License. You may obtain a copy of the License at

   http://www.apache.org/licenses/LICENSE-2.0

   Unless required by applicable law or agreed to in writing, software
   distributed under the License is distributed on an "AS IS" BASIS,
   WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
   See the License for the specific language governing permissions and
   limitations under the License.
-->
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://hadoop001:3306/hive?createDatabaseIfNotExist=true&amp;useSSL=false</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
    <description>Driver class name for a JDBC metastore</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>root</value>
    <description>Username to use against metastore database</description>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>ruozedata001</value>
    <description>password to use against metastore database</description>
  </property>
  <property>
    <name>hive.metastore.warehouse.dir</name>
    <value>/user/hive/warehouse</value>
    <description>location of default database for the warehouse</description>
  </property>
  <property>
    <name>hive.cli.print.header</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.cli.print.current.db</name>
    <value>true</value>
  </property>
  <property>
    <name>hive.server2.thrift.bind.host</name>
    <value>hadoop001</value>
  </property>
  <property>
    <name>hive.server2.thrift.port</name>
    <value>10000</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://hadoop001:9083</value>
  </property>
</configuration>

Complex approach: put all five files in.

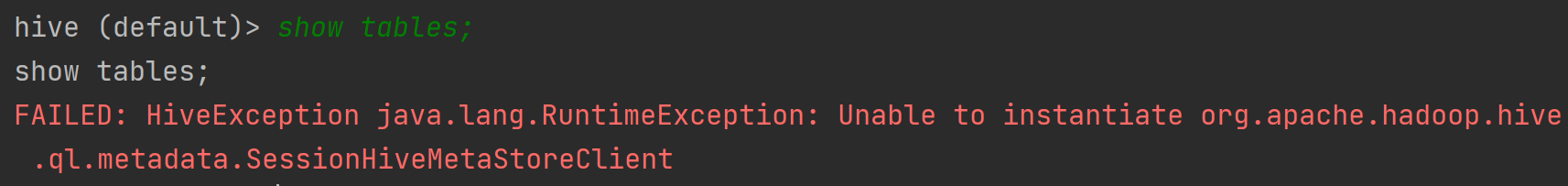
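As a quick sanity check before launching the CLI, a small standalone class can confirm that hive-site.xml is actually visible on the classpath and parses as well-formed XML. This is only a sketch under assumptions: the class name `HiveSiteCheck` is hypothetical, it uses the JDK's built-in DOM parser rather than Hive's own configuration loader, and it prints just two of the keys shown in the file above.

```java
import java.io.InputStream;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical helper: reads a Hadoop-style <configuration> file with the JDK DOM parser.
public class HiveSiteCheck {

    // Parse <property><name>..</name><value>..</value></property> entries into a map.
    public static Map<String, String> parse(InputStream in) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(in);
        Map<String, String> props = new LinkedHashMap<>();
        NodeList nodes = doc.getElementsByTagName("property");
        for (int i = 0; i < nodes.getLength(); i++) {
            Element property = (Element) nodes.item(i);
            String name = text(property, "name");
            if (name != null) {
                props.put(name, text(property, "value"));
            }
        }
        return props;
    }

    // Return the trimmed text of the first child element with the given tag, or null.
    private static String text(Element parent, String tag) {
        NodeList list = parent.getElementsByTagName(tag);
        return list.getLength() == 0 ? null : list.item(0).getTextContent().trim();
    }

    public static void main(String[] args) throws Exception {
        InputStream in = HiveSiteCheck.class.getClassLoader().getResourceAsStream("hive-site.xml");
        if (in == null) {
            System.out.println("hive-site.xml not found on the classpath");
            return;
        }
        try (InputStream stream = in) {
            Map<String, String> props = parse(stream);
            for (String key : new String[] {"javax.jdo.option.ConnectionURL", "hive.metastore.uris"}) {
                System.out.println(key + " = " + props.get(key));
            }
        }
    }
}
```

A strict XML parser rejects a bare `&` inside a value, so the `&` in the JDBC URL has to be written as `&amp;` in the file; a check like this surfaces that kind of mistake early.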

metastore

Add the following to hive-site.xml:

hive.metastore.uris: thrift://hadoop001:9083

<property>
  <name>hive.metastore.uris</name>
  <value>thrift://hadoop001:9083</value>
</property>

Run on the server:

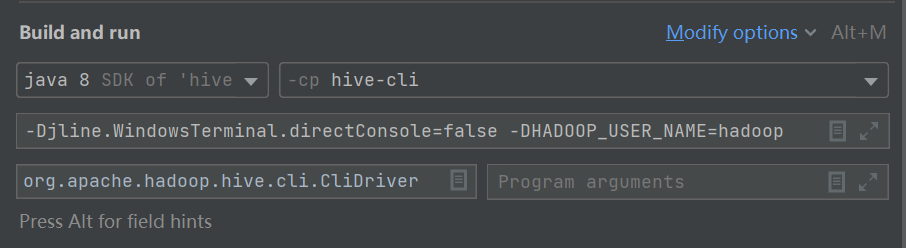

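hive --service metastore

This starts the server-side metastore.

Modify the run configuration by adding the following to IDEA's VM options:

Set the system property jline.WindowsTerminal.directConsole to false so that the console can accept input; otherwise nothing happens when you press Enter after typing a command:

-Djline.WindowsTerminal.directConsole=false

Change the system user name; otherwise you have no permission to access HDFS: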

-DHADOOP_USER_NAME=hadoop

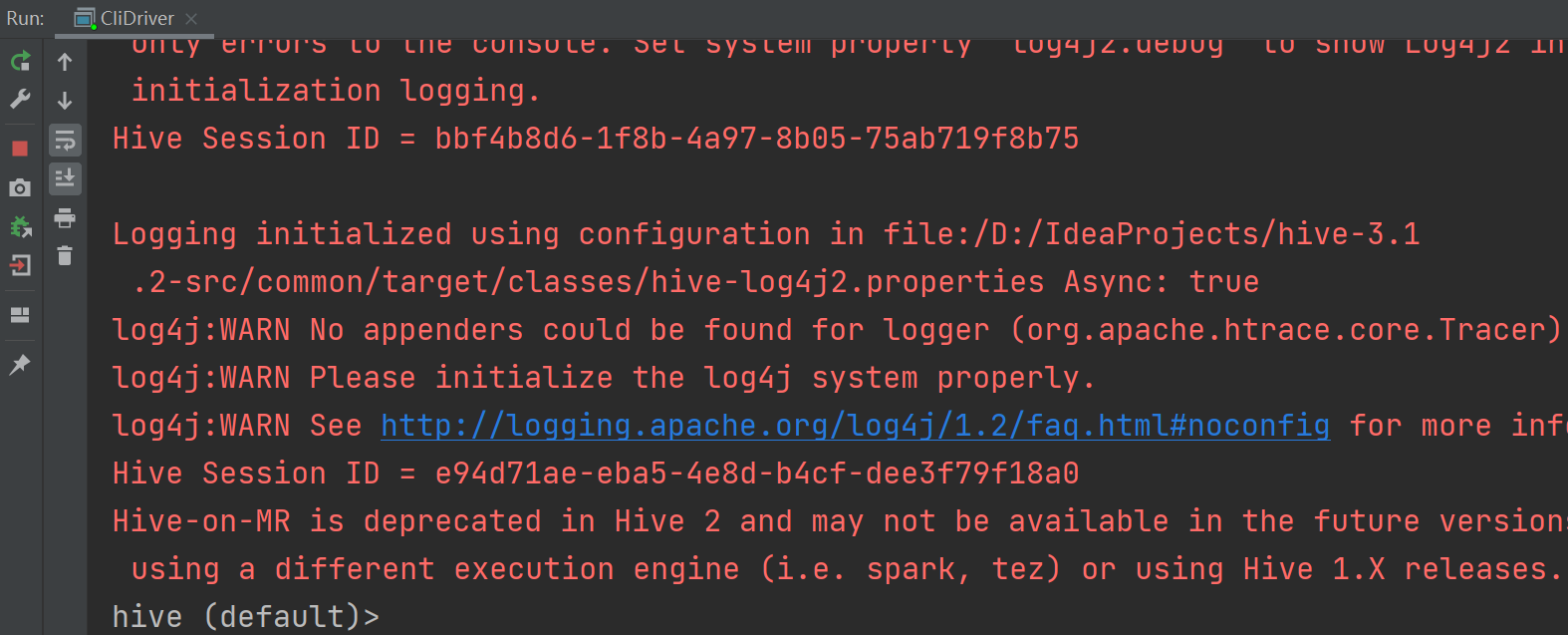
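Options passed with `-D` become JVM system properties, so one way to confirm that the run configuration picked them up is to print them from a throwaway class started with the same VM options. The class name `VmOptionsCheck` and the `describe` helper are hypothetical and exist only for this illustration.

```java
// Hypothetical helper: prints the two system properties set through IDEA's VM options.
public class VmOptionsCheck {

    // Format a system property as key=value, or mark it as not set.
    public static String describe(String key) {
        String value = System.getProperty(key);
        return key + "=" + (value == null ? "<not set>" : value);
    }

    public static void main(String[] args) {
        System.out.println(describe("jline.WindowsTerminal.directConsole"));
        System.out.println(describe("HADOOP_USER_NAME"));
    }
}
```

If either line reports `<not set>`, the VM options were most likely entered in the wrong field of the run configuration (for example, as program arguments).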

Run the entry-point file CliDriver.java.

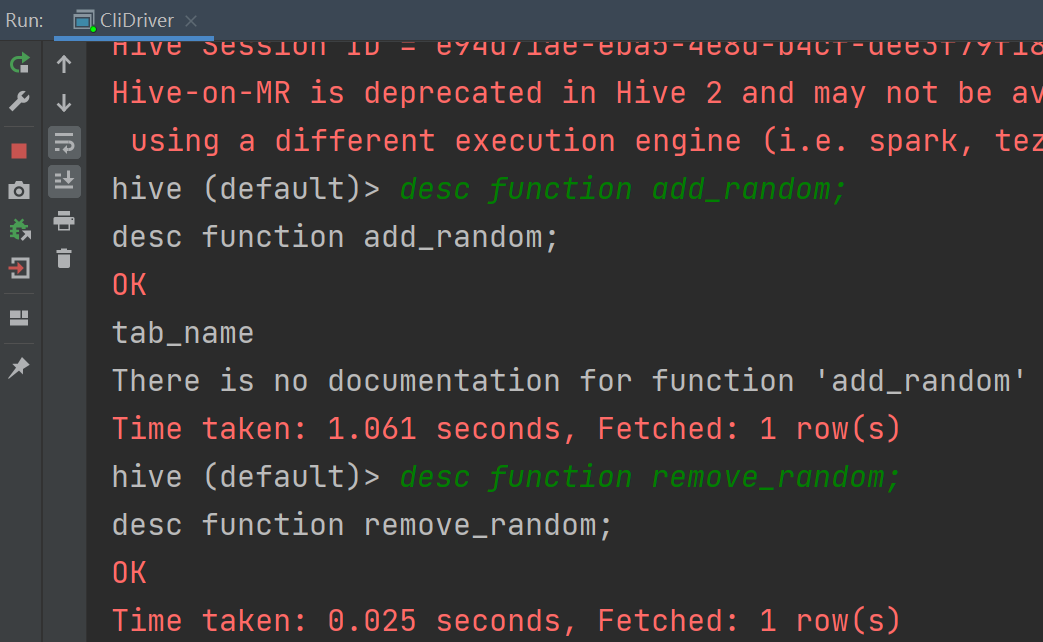

Verify that the functions exist:

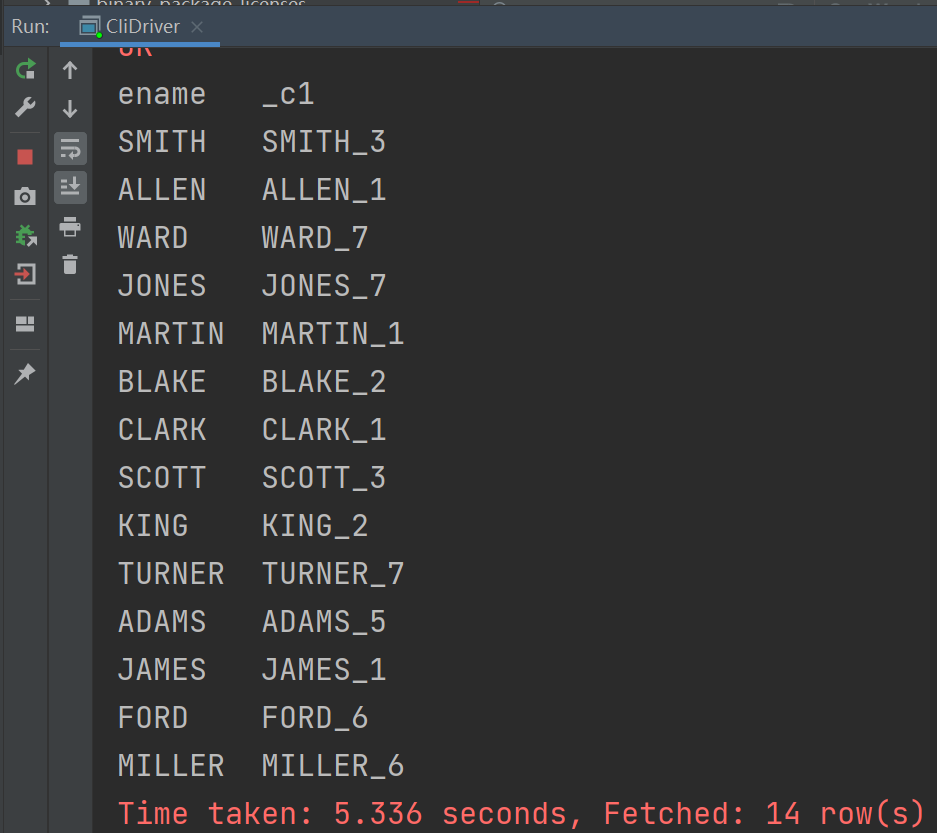

Test usage:

add_random:

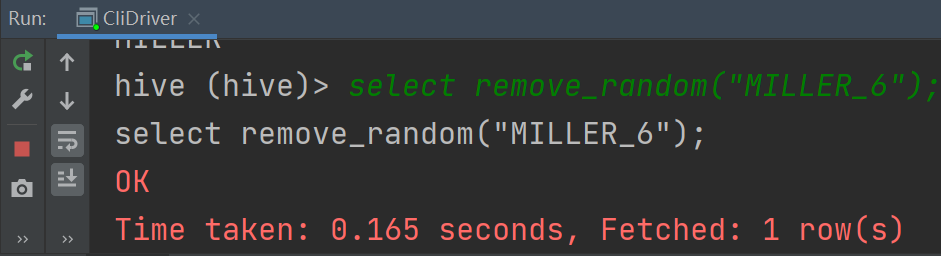

remove_random: